Chris Nuzum Hyperkult XXV Video | Tripping Up Memory Lane

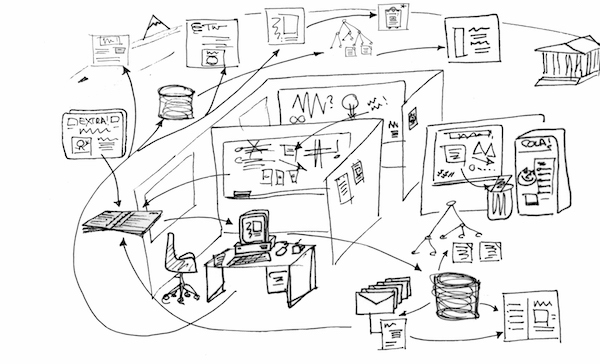

Watch this video of Chris Nuzum's Tripping Up Memory Lane talk at Hyperkult 2015, University of Lüneburg, 10 July 2015. Traction Software CTO and co-founder Chris Nuzum reviews hypertext history, his experience as a hypertext practitioner, and the core principles of Traction TeamPage.

Original Traction Product Proposal

I hope you'll enjoy reading the original Traction Product Proposal, dated October 1997. Many early Traction concepts carried over directly to the Traction® TeamPage product first commercially released in July 2002, but we've also learned a lot since then - as you might hope! The Proposal and its Annotated References may be helpful to students interested in the history and evolution of hypertext.

Tripping Up Memory Lane

Last week I gave a talk at the Hyperkult 2015 conference. It was an honor to present there, especially since it was the 25th and final time the conference was held. This was my proposal for the talk:

My Part Works

About 50 years ago, Andy van Dam joined the Brown University faculty with the world's second PhD in Computer Science (earned at the University of Pennsylvania). Today many of Andy’s friends, faculty, students and former students are celebrating his 50 years at Brown with Stone Age, Iron Age and Machine Age panels. [ June 9, 2015 update: See event video: Celebrate with Andy: 50 Years of Computer Science at Brown University ]

Enterprise 2.0 - Are we there yet?

Andrew McAfee writes Nov 20, 2014: "Facebook’s recent announcement that it’s readying a version of its social software for workplaces got me thinking about Enterprise 2.

Why did it take so long? I can think of a few reasons. It’s hard to get the tools right — useful and simple software is viciously hard to make. Old habits die hard, and old managers die (or at least leave the workforce) slowly. The influx of ever-more Millennials has almost certainly helped, since they consider email antediluvian and traditional collaboration software a bad joke.

Whatever the causes, I’m happy to see evidence that appropriate digital technologies are finally appearing to help with the less structured, less formal work of the enterprise. It’s about time.

What do you think? Is Enterprise 2.

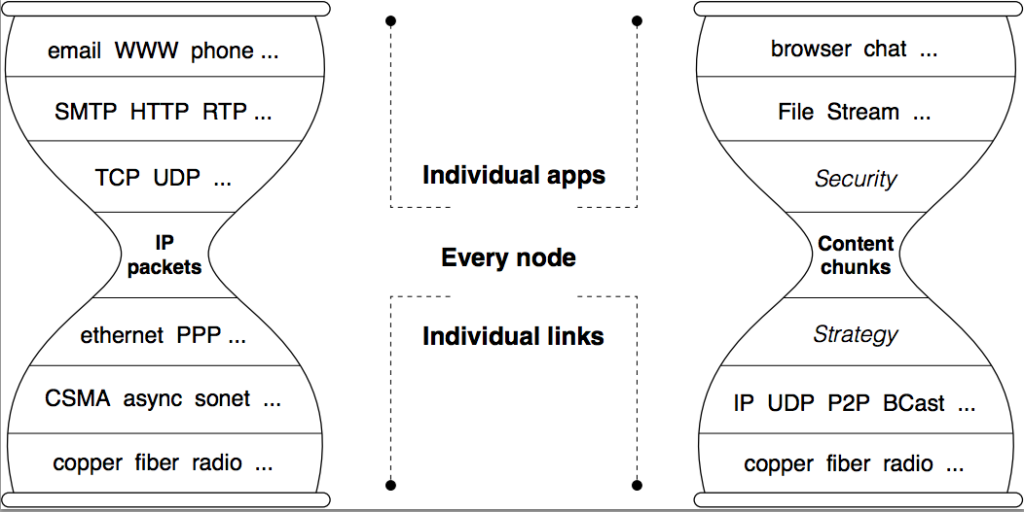

Named Data Networking - Boffin Alert

On Sep 4, 2014 the Named Data Networking project announced a new consortium to carry the concepts of Named Data Networking (NDN) forward in the commercial world. If this doesn't sound exciting, try The Register's take: DEATH TO TCP/IP cry Cisco, Intel, US gov and boffins galore. What if you could use the internet to access content securely and efficiently, where anything you want is identified by name rather than by its internet address? The NDN concept is technically sweet, gaining traction, and is wonderfully explained and motivated in a video by its principal inventor and instigator Van Jacobson. Read on for the video, a few quotes, reference links, and a few thoughts on what NDN could mean for the Internet of Things, Apple, Google and work on the Web. Short version: Bring popcorn.

Linked, Open, Heterogeneous

Art, Data, and Business Duane Degler of Design For Context posted slides from his 5 April 2014 Museums and the Web talk, Design Meets Data (Linked, Open, Heterogeneous). Degler addresses what he calls the LAM (Libraries, Archives, Museums) Digital Information Ecosystem. I believe the same principles apply when businesses connect internal teams, external customers, external suppliers, and partners of all sorts as part of their Business Information Ecosystem. Read Degler's summary and slides, below:

Thought Vectors - Ted Nelson: Art not Technology

The technoid vision, as expressed by various pundits of electronic media, seems to be this: tomorrow's world will be terribly complex, but we won't have to understand it. Fluttering through hailstorms of granular information, ignorant like butterflies, we will be guided by smell, or Agents, or leprechauns, to this or that pretty picture, or media object, or factoid. If we have a Question, it will be possible to ask it in English. Little men and bunny rabbits will talk to us from the computer screen, making us feel more comfortable about our delirious ignorance as we flutter through this completely trustworthy technological paradise about which we know less and less.

Thought Vectors - What Motivated Doug Engelbart

By "augmenting human intellect" we mean increasing the capability of a man to approach a complex problem situation, to gain comprehension to suit his particular needs, and to derive solutions to problems. Increased capability in this respect is taken to mean a mixture of the following: more-rapid comprehension, better comprehension, the possibility of gaining a useful degree of comprehension in a situation that previously was too complex, speedier solutions, better solutions, and the possibility of finding solutions to problems that before seemed insoluble. And by "complex situations" we include the professional problems of diplomats, executives, social scientists, life scientists, physical scientists, attorneys, designers--whether the problem situation exists for twenty minutes or twenty years. We do not speak of isolated clever tricks that help in particular situations. We refer to a way of life in an integrated domain where hunches, cut-and-try, intangibles, and the human "feel for a situation" usefully co-exist with powerful concepts, streamlined terminology and notation, sophisticated methods, and high-powered electronic aids. 1a1

Reinventing the Web II

Updated 19 Jun 2016. Why isn't the Web a reliable and useful long-term store for the links and content people independently create? What can we do to fix that? Who benefits from creating spaces with stable, permanently addressable content? Who pays? What incentives can make Web-scale permanent, stable content with reliable bidirectional links and other goodies as common and useful as Web search over the entire flaky, decentralized and wildly successful Web? Here's a good Twitter conversation to read:

60% of my fav links from 10 yrs ago are 404. I wonder if Library of Congress expects 60% of their collection to go up in smoke every decade.

— Bret Victor (@worrydream) June 15, 2014

Thought Vectors - Vannevar Bush and Dark Matter

On Jun 9 2014 Virginia Commonwealth University launched a new course, UNIV 200: Inquiry and the Craft of Argument with the tagline Thought Vectors in Concept Space. The eight week course includes readings from Vannevar Bush, J.

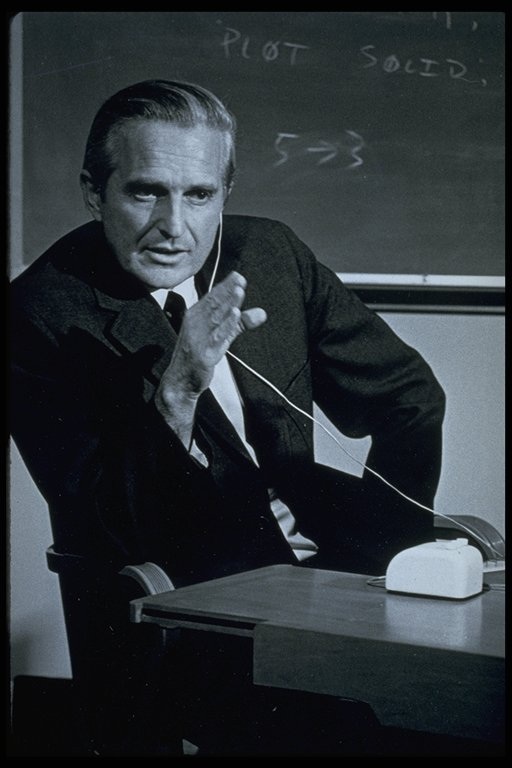

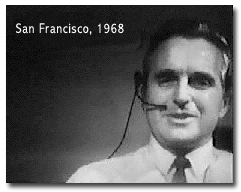

Remembering Doug Engelbart, 30 January 1925 - 2 July 2013

I was very sad to learn that Doug Engelbart passed away at his home on 2 July 2013. Doug had a long life as a visionary engineer, inventor, and pioneer of technology we use every day - and technology where we're just starting to catch up to Doug and his SRI team in 1968. Doug had a quiet, friendly, and unassuming nature combined with deep knowledge, iron will, and a determination to pursue his vision. His vision was to aid humanity in solving complex, difficult and supremely important problems; Doug's goals were noble and selfless. The sense of dealing with an Old Testament prophet - a kindly Moses - is perhaps the greatest loss I and countless others who have met and been inspired by Doug feel today. I've written frequently about Doug in the past, and I'll continue to do so. Here are a few remembrances and resources that seem appropriate. I'll update this list over the next several days. Farewell Doug and my sincere condolences to his family and many friends.

Intertwingled Work

Last week's post by Jim McGee Managing the visibility of knowledge work kicked off a nice conversation on Observable Work (using a term introduced by Jon Udell) including: my blog post expanding on a comment I wrote on Jim's post; Brian Tullis's Observable Work: The Taming of the Flow based on a comment Brian made on Jim's post, which he found from a Twitter update by @jmcgee retweeted by @roundtrip; a Twitter conversation using the hash tag #OWork (for "Observable Work"); John Tropea's comment back to Jim from a link in a comment I left on John's Ambient Awareness is the new normal post; Jim's Observable work - more on knowledge work visibility (#owork), linking back to Mary Abraham's TMI post and Jack Vinson's Invisible Work - spray paint needed post, both written in response to Jim's original post; followed by Jack Vinson's Explicit work (#owork) and Paula Thornton's Enterprise 2.0 Infrastructure for Synchronicity.

Doug Engelbart | 85th Birthday Jan 30, 2010

"DOUG Engelbart sat under a twenty-two-foot-high video screen, "dealing lightning with both hands." At least that's the way it seemed to Chuck Thacker, a young Xerox PARC computer designer who was later shown a video of the demonstration that changed the course of the computer world." from What the Dormouse Said, John Markoff.

Enterprise 2.0 Schism

I have to confess that I've enjoyed watching recent rounds of Enterprise 2.

Reinventing the Web

John Markoff wrote a really good Jan 11 2009 New York Times profile, In Venting, a Computer Visionary Educates on Ted Nelson and his new book, Geeks Bearing Gifts: How the Computer World Got This Way (available on Lulu.

Enterprise 2.0 - Letting hypertext out of its box

In his Mar 26, 2006 post, Putting Enterprise 2.

Traction Roots - Doug Engelbart

The source of the term Journal for the Traction TeamPage database is Douglas Engelbart's NLS system (later renamed Augment), which Doug developed in the 1960s as one of the first hypertext systems. Traction's time-ordered database, entry + item ID addressing, and many other Traction concepts were directly inspired by Doug's work. I'd also claim that Doug's Journal is the first blog, dating from 1969.

Tricycles vs. Training Wheels

In Infoworld, Jon Udell writes When it comes to increasing human productivity, user interfaces aren't one size fits all and cites Doug Engelbart:
